GPT-5: Slowing AI progress (and chart crime!)

GPT-5 is cool, but progress is obviously slowing and the announcements are FULL of misleading charts to hide this

The jump from GPT-2 to GPT-3 was world-changing. GPT-3 to GPT-4 was another impressive leap in functionality. So it was only natural that GPT-5 has been teased and hyped for some time now. From OpenAI’s announcement:

GPT‑5 is a unified system with a smart, efficient model that answers most questions, a deeper reasoning model (GPT‑5 thinking) for harder problems, and a real‑time router that quickly decides which to use based on conversation type, complexity, tool needs, and your explicit intent (for example, if you say “think hard about this” in the prompt). The router is continuously trained on real signals, including when users switch models, preference rates for responses, and measured correctness, improving over time. Once usage limits are reached, a mini version of each model handles remaining queries. In the near future, we plan to integrate these capabilities into a single model.

It’s only been a day, but I (and much of the internet) are impressed, but not that impressed. See this post from the OpenAI subreddit for example:

Slowing progress

GPT-5 is a step-wise change, not an order of magnitude.

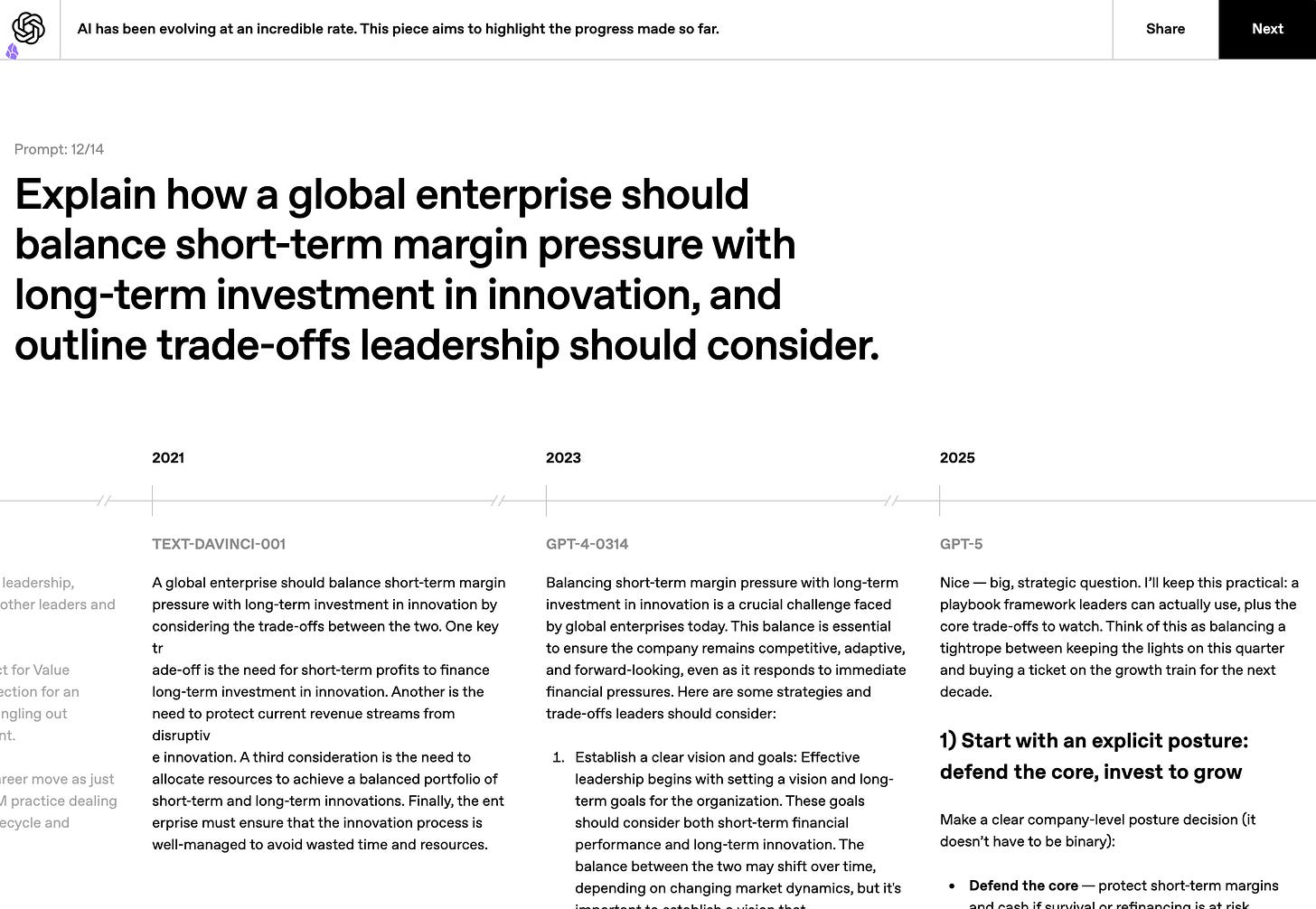

One really interesting way you can see this directly is OpenAI’s interactive tool meant to show differences in models with the same prompt:

You’ll notice HUGE leaps between models up until GPT-5. It looks like some sort of wall was hit, though it’s clearly still an improvement (just an incremental one).

The announcement included callouts that “benchmarks aren’t everything” which kind of speaks for itself.

Insane amounts of chart crime

The weirdest part of this launch for me is the incredible lengths they went to conceal the slowing progress with chart crime.

Chart crime: the deceptive or misleading presentation of data in a chart, often with the intent to manipulate or misinform viewers

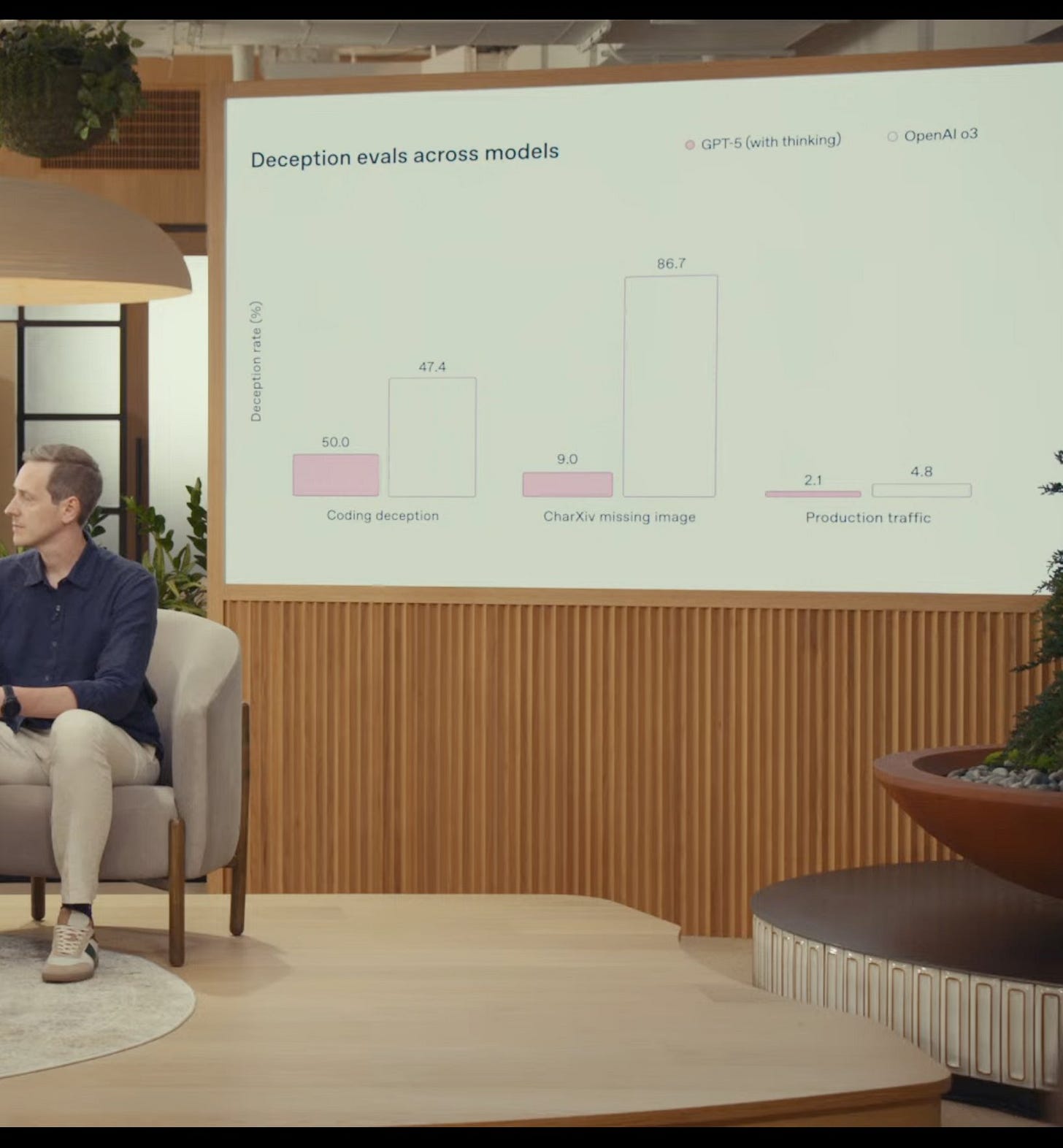

Take the “deception score” for example. GPT-5 actually scores higher than o3, but that’s not what this graph suggests:

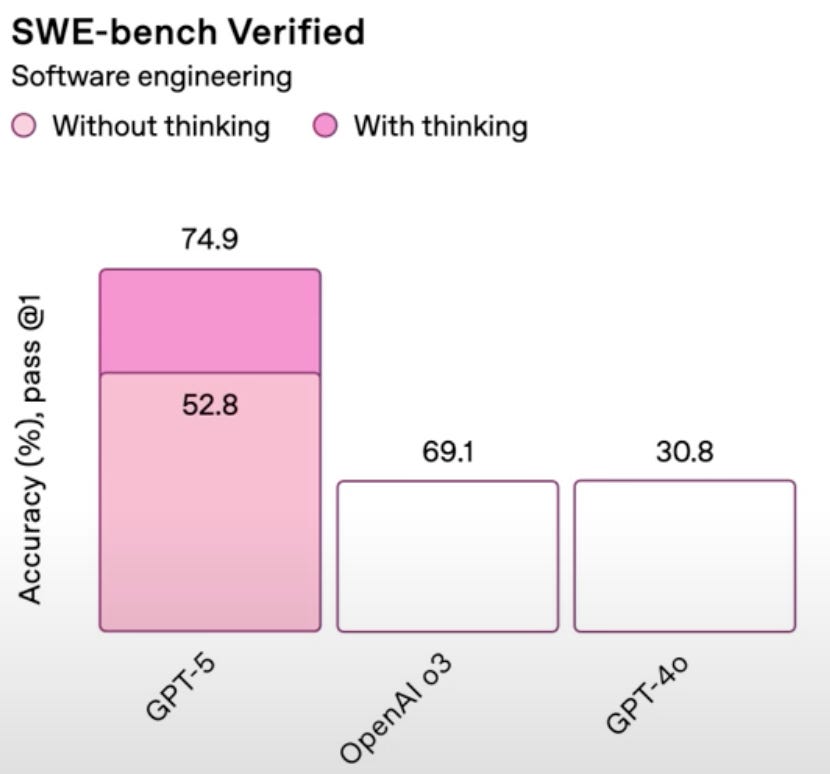

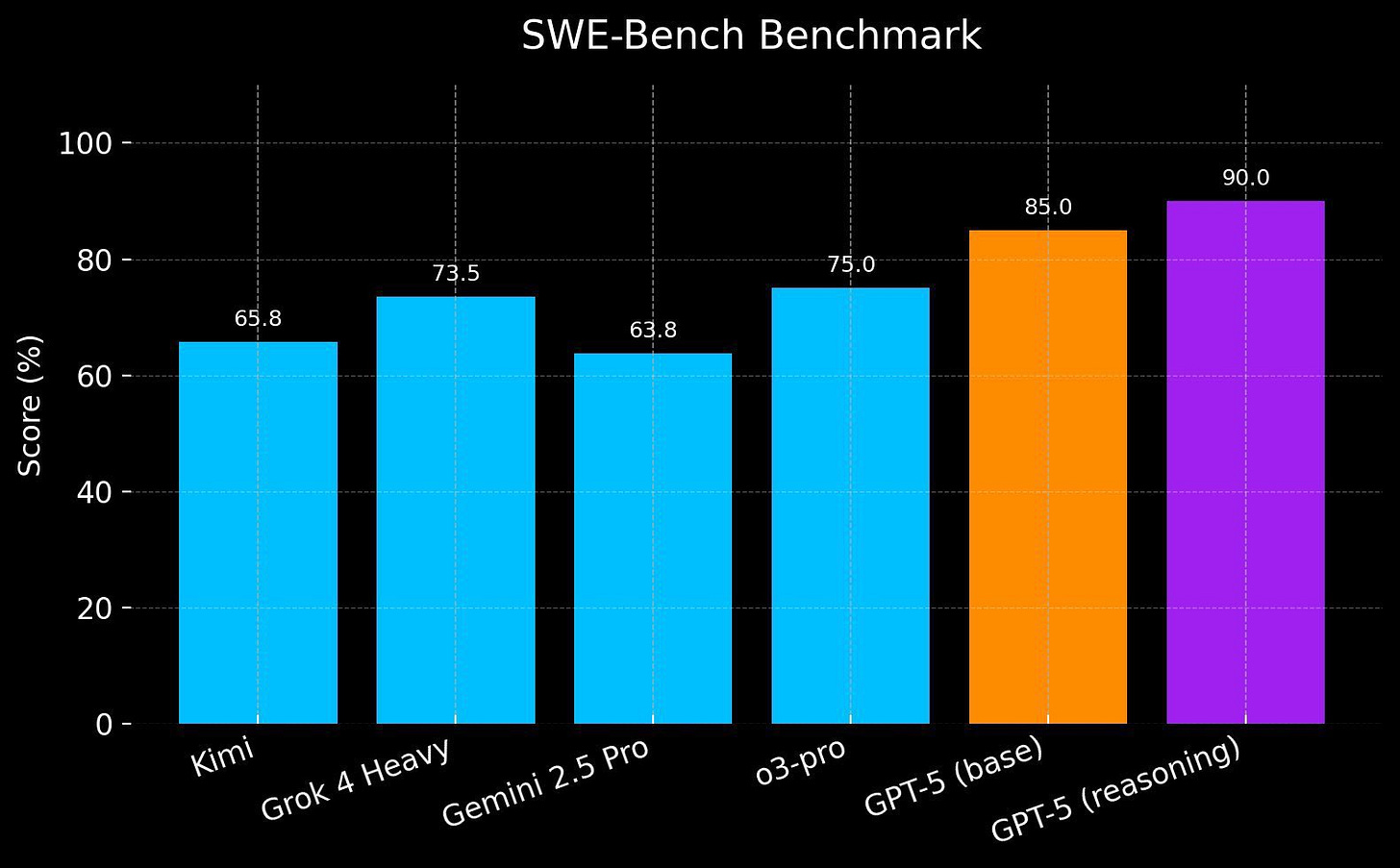

Even the SWE-bench chart is manipulated:

What this means for developers

Speaking of SWE-bench, you might be wondering how this affects software engineers.

The jury is still out, but GPT-5 with thinking is a clear improvement over even o3.

It’s such an improvement that I think it’s safe to call this benchmark saturated.

I’m going to make it my daily driver for a while in Cursor before giving a full opinion, but I’m optimistic.

Here’s what OpenAI had to say about the model’s coding improvements:

GPT‑5 is our strongest coding model to date. It shows particular improvements in complex front‑end generation and debugging larger repositories. It can often create beautiful and responsive websites, apps, and games with an eye for aesthetic sensibility in just one prompt, intuitively and tastefully turning ideas into reality. Early testers also noted its design choices, with a much better understanding of things like spacing, typography, and white space.

If this is particularily interesting to you, I ecourage you to read the coding writeup that shipped alongside the announcement.

If you’re new to the newsletter, consider subscribing to get more reports like this one (alongside more detailed guides). Below is a great place to start.

Start here: Welcome to The Augmented Engineer

Here’s the deal. AI is changing what it means to be a software engineer. I say this as someone who writes software for a living.