Anthropic says software engineering is done (it's not)

How to thrive in the AI-assisted era of software development

Eight months after Anthropic’s CEO predicted AI would write 90% of code in 3–6 months, Anthropic engineer Adam Wolff is declaring “software engineering is done”.

His tweet comes alongside the announcement of their new Claude Opus 4.5 model. Unsurprisingly, he predicted that perhaps “as soon as the first half of next year,” we’ll trust AI-generated code like we trust compiler output, no longer even checking it by hand.

Is this hype, or a glimpse of our near future? My opinion is that it’s more hype than reality, but that doesn’t mean software engineering isn’t seriously changing. If you haven’t been paying attention, tools like Cursor and Claude Code, alongside state-of-the-art models, are reshaping the day-to-day of plenty of engineers.

So what exactly has changed? And what does it take to thrive today? Let’s dig in!

Strategy matters more than syntax

For most of the history of the profession, being a software engineer meant spending most of your day grinding out syntax. Carefully crafting loops, fixing type errors, and remembering API specifics occupied a lot of space in my brain even 2 years ago. Today, high-quality AI autocompletion has made remembering raw syntax far less relevant.

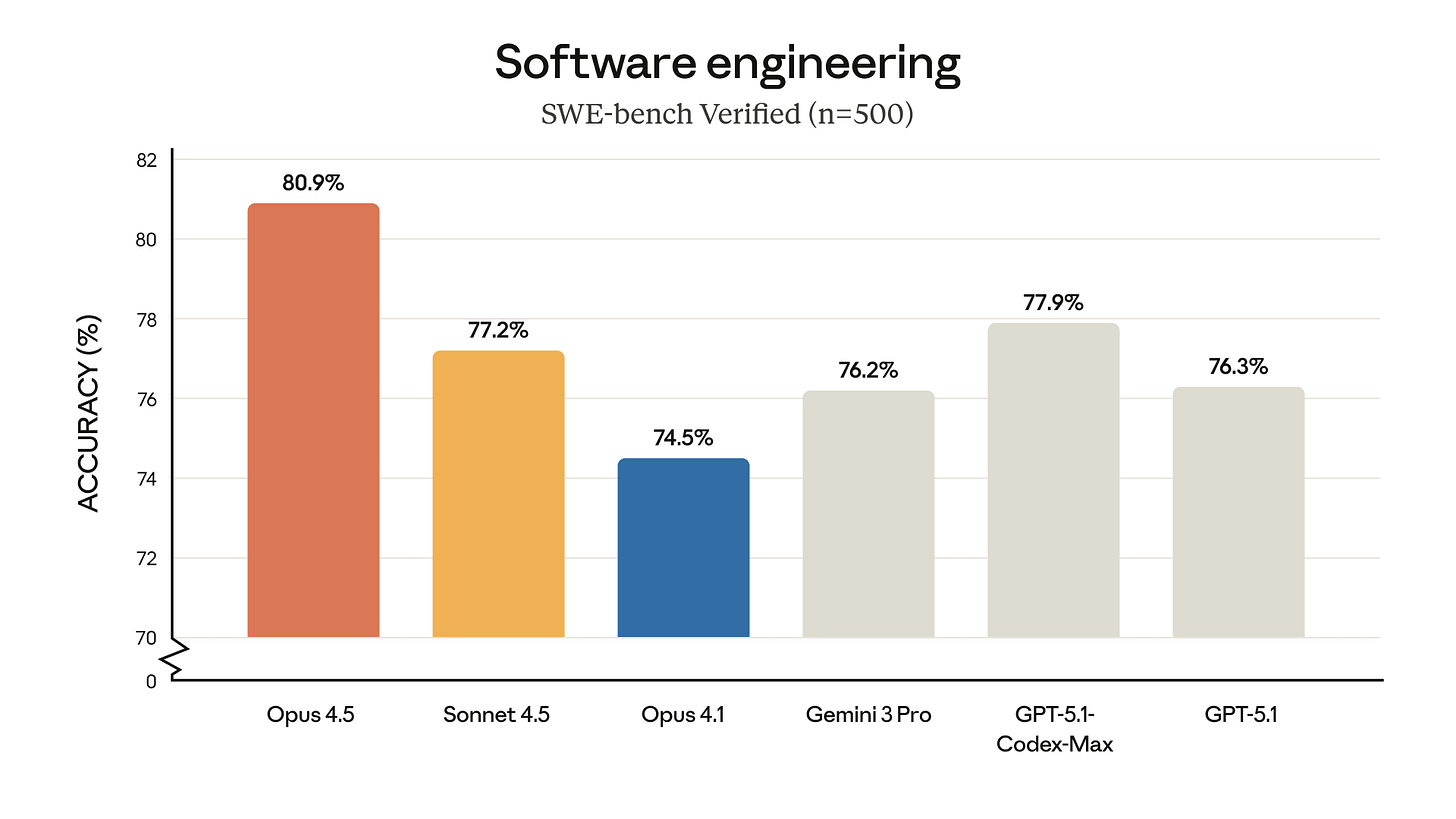

Opus 4.5 shows that even the best benchmarks are becoming saturated by state-of-the-art models.

Tools built on large language models (LLMs), like GitHub Copilot and Claude, can generate idiomatic code for you given a comment or function name. This didn’t make software engineers useless, but it did raise the bar on what each engineer is expected to deliver. It’s been my experience that we’re expected to think at a higher level now.

The focus has shifted from writing code to defining the problem and architecture.

We already know that “real software work is more than making code compile”. The creative, conceptual work remains squarely in the human domain, and I’m skeptical (though preparing) that AI will surpass us there.

In practical terms, this means syntax knowledge alone won’t set you apart as an engineer. The new breed of AI copilots can generate correct Python or Java syntax with insane accuracy. What they can’t do is decide why that code should exist at all. Forward-looking engineers are investing more time in learning system design, user requirements, and high-level architecture, because those skills complement the AI. In short, AI can handle writing the code, but it can’t (yet) figure out exactly what code needs writing in the first place.


Agents have taken us beyond autocomplete

If 2023–2024 was the era of AI autocompletion, 2025+ is the era of AI agents. In fact, Karpathy is preaching that this is the decade of agents. Early coding assistants like Copilot felt like autocomplete on steroids, but always with the human guiding step by step. Now we’re seeing tools that attempt to handle whole tasks autonomously, following high-level instructions.

Anthropic’s new Claude Opus 4.5 model was explicitly built for this agentic paradigm. It’s touted as “the best model in the world for coding, agents, and computer use”.

In an internal demo, Claude Opus 4.5 reportedly fixed a complex multi-system bug with minimal hand-holding, succeeding at a task that had stumped engineers using the previous model. Early users commented that Opus 4.5 “just ‘gets it’” when pointed at a tricky problem. It can “pause and think” mid-problem and decide on a strategy, something even I struggle to do on long days 😬

Google’s Gemini 3, also released in late 2025, follows a similar theme. Google calls it their “most powerful agentic…model yet,” built on “state-of-the-art reasoning” capabilities.

All this means the dominant writing pattern for coding is shifting. Where we once had a single text box that you, the developer, typed code into (with maybe some autocomplete help), we now have agent interfaces. You might describe a feature or bugfix in natural language, and the AI agent will go off to implement it.

For example, developers using Cursor’s AI code editor can now spin up multiple agents to tackle different tasks concurrently, all from a sidebar interface (more on that next).

And with tools like Claude Code, you can ask the AI to not just generate a snippet, but to “debug this program” or “refactor these modules,” and it will autonomously carry out a sequence of steps to get it done. This agent-based workflow is a profound change in how software is built.

Parallel agents feel like being a manager

One key innovation raising the ceiling for individual productivity is parallel agent orchestration. In traditional coding (or even with older copilots), an engineer works on one problem at a time. But what if you could deploy a team of AI agents to work simultaneously on different components of a project? That’s exactly where tools like Claude Code and Cursor are pushing people.

Cursor (the AI-powered IDE), for example, rolled out a multi-agent interface in version 2.0 that lets you run “up to eight agents in parallel on a single prompt.”

In Cursor’s sidebar, you can launch several agents, each operating on its own isolated copy of your repository, to prevent them from stepping on each other’s changes. For example, if you have a list of feature requests or bugs, you could dispatch one agent to implement the UI, another to update the backend API, and a third to write new unit tests.

The IDE uses techniques like Git worktrees or cloud sandboxes so that each agent’s file edits don’t conflict. As the human in the loop, you can monitor progress and then merge the changes once all agents finish.
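To make the worktree idea concrete, here is a minimal sketch of how one isolated checkout per agent could be set up by hand. It only builds the `git worktree add` commands and does not run them. The `agent/<task>` branch names, the sibling-directory layout, and the `main` base branch are my assumptions for illustration, not how Cursor does it internally.

```python
from pathlib import Path


def worktree_commands(repo: Path, tasks: list[str], base: str = "main") -> list[list[str]]:
    """Build one `git worktree add` command per agent task (nothing is executed)."""
    commands = []
    for task in tasks:
        branch = f"agent/{task}"                        # hypothetical branch naming scheme
        checkout = repo.parent / f"{repo.name}-{task}"  # sibling directory per agent
        commands.append(
            ["git", "-C", str(repo), "worktree", "add", "-b", branch, str(checkout), base]
        )
    return commands


if __name__ == "__main__":
    # Print the commands so they can be reviewed before running them yourself.
    for cmd in worktree_commands(Path("/work/shop"), ["ui", "api", "tests"]):
        print(" ".join(cmd))
```

Each agent then edits files only inside its own directory and branch, so merging becomes an ordinary branch merge once the work is reviewed.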

The impact of parallel AI orchestration is that one engineer can tackle problems that were once the domain of small teams. Tasks that are embarrassingly parallel (like migrating similar code in dozens of files, or researching several alternative approaches) can be divided among sub-agents, drastically reducing wall-clock time to completion.
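As a rough illustration of why embarrassingly parallel work speeds up, the sketch below fans independent tasks out to a pool of workers and collects the results. `run_agent` is a hypothetical stand-in for whatever actually invokes an agent; here it just returns a label, and the cap of eight workers simply mirrors the Cursor limit quoted earlier.

```python
from concurrent.futures import ThreadPoolExecutor

MAX_AGENTS = 8  # assumption: mirrors the "up to eight agents" limit quoted for Cursor 2.0


def run_agent(task: str) -> str:
    """Hypothetical stand-in for a real agent call; here it just labels the task."""
    return f"done: {task}"


def fan_out(tasks: list[str]) -> dict[str, str]:
    """Run independent tasks concurrently and collect results keyed by task."""
    with ThreadPoolExecutor(max_workers=MAX_AGENTS) as pool:
        results = pool.map(run_agent, tasks)  # map() yields results in input order
        return dict(zip(tasks, results))


if __name__ == "__main__":
    print(fan_out(["migrate a.py", "migrate b.py", "migrate c.py"]))
```

This only pays off when the tasks really are independent; anything that shares files or state needs the coordination discussed next.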

Of course, orchestrating many agents introduces new challenges: you have to ensure they don’t duplicate work or produce inconsistent outputs, and you must integrate their results. But those are high-level coordination problems that are solvable with practice.
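One cheap coordination check before merging is to look for files that more than one agent touched. The sketch below assumes you already have each agent's set of changed file paths (for example from a diff against the base branch); the agent names and the `overlapping_files` helper are made up for illustration.

```python
from collections import defaultdict


def overlapping_files(changes: dict[str, set[str]]) -> dict[str, list[str]]:
    """Map each file touched by more than one agent to the sorted agent names."""
    owners = defaultdict(list)
    for agent, files in changes.items():
        for path in files:
            owners[path].append(agent)
    return {path: sorted(agents) for path, agents in owners.items() if len(agents) > 1}


if __name__ == "__main__":
    changes = {
        "ui": {"app.tsx", "api.ts"},
        "api": {"api.ts", "server.py"},
        "tests": {"test_api.py"},
    }
    print(overlapping_files(changes))
```

An overlap doesn't necessarily mean a conflict, but it tells you where to look first when integrating the results.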

You’ll have to choose more output or less work

With AI agents capable of writing and fixing code, one might imagine the life of a software engineer getting easier, but the opposite has been my experience. It’s tempting for some to think they can now slack off and let the AI do the heavy lifting.

Ambitious engineers are using AI to multiply their output, rather than coast. In an environment where everyone has access to these superpowers, the expectations simply rise.

AI is a force multiplier. Companies are keeping headcount and simply expecting each person to deliver more ambitious systems in the same time. If you coast, you stagnate; if you harness the AI, you can stand out by building larger, more impactful systems than ever before.

It’s also worth noting that entry-level programming tasks are being eaten alive by AI. Things like writing basic CRUD endpoints, converting JSON, and writing unit tests are now one-click operations in AI-assisted IDEs.

Google’s CEO recently revealed that over 25% of new code at Google is machine-generated, with human engineers shifting to a reviewer role. The junior-level work is often done by the AI, and a human ensures it fits the requirements and quality standards.

The path to staying relevant is to elevate your contribution above what the bots can do.

In practical terms, you should be actively looking for ways to let AI expand the scope of your work.

The role of a software engineer is evolving

So, is software engineering “done”, as the tweet provocatively claimed?

Not quite. It’s evolving. The Claude Opus 4.5 demo and others like it show that coding tasks can be done at blazing speeds with minimal human input. But building software was never just about typing code.

Yes, AI models are writing an increasing share of our code. And yes, the productivity leaps are real. But seasoned engineers also point out that we have heard grand predictions of “coding is dead” before, and yet demand for skilled engineers remains high.

The truth is, AI is reshaping what it means to be a software engineer. As long as there are new problems to solve and systems to build, we’ll need human creativity and oversight in the loop.

To stay effective in this new era, embrace lifelong learning and adaptability. Keep up with the latest AI capabilities (they’re arriving at breakneck speed: Gemini 3, Claude Opus 4.5, and GPT-5.1 all dropped within a 12-day span).

But also double down on the fundamentals that won’t change: understanding user needs, architecting robust systems, and thinking critically about technology’s impact.

Hard agree that these developments shift focus to architecture and what I would summarize as product management. Engineers with product skills will have even more of a leg up (it was always a useful skill).

I would also say the goal of software engineering was never to write code. It's actually to solve a user's problem with the minimum amount of code. It's more expensive to maintain code than to write it. I don't have a good intuition yet how this changes with AI assistance. Current coding models seem to have a bias towards writing more code and I regularly trim model output by a lot. Maybe this verbosity won't matter in the long run if the AI is maintaining the code, who knows.
